Monocular Depth Estimation via Deep Structured Models with Ordinal Constraints

- Daniel Ron1,2

- Kun Duan3

- Chongyang Ma3

- Ning Xu3

- Shenlong Wang4

- Sumant Hanumante3

- Dhritiman Sagar3

- 1CMU

- 2Amazon

- 3Snap Inc.

- 4University of Toronto

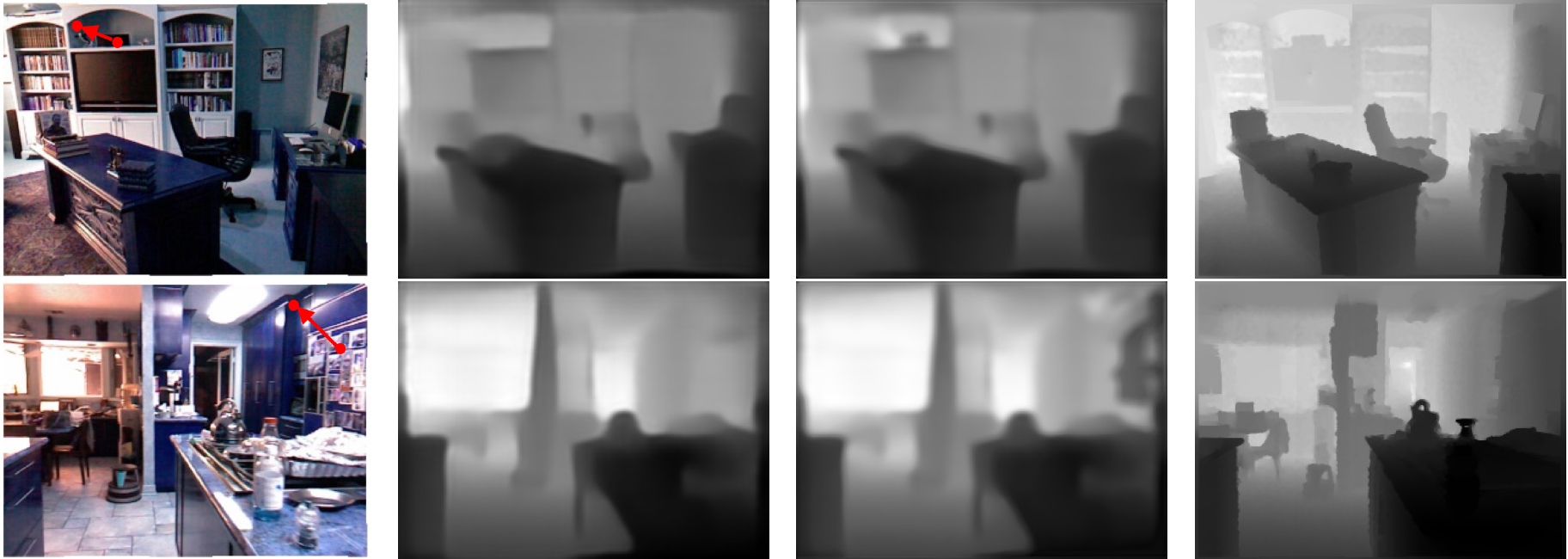

Depth estimation results on NYU Depth V2 dataset. From left to right: input images with ordinal constraints visualized as red arrows pointing from a closer click to another further point; results from the FCRN base network; our refined results; ground-truth.

Abstract

User interaction provides useful information for solving challenging computer vision problems in practice. In this paper, we show that a very limited number of user clicks could greatly boost monocular depth estimation performance and overcome monocular ambiguities. We formulate this task as a deep structured model, in which the structured pixel-wise depth estimation has ordinal constraints introduced by user clicks. We show that the inference of the proposed model could be efficiently solved through a feed-forward network. We demonstrate the effectiveness of the proposed model on NYU Depth V2 and Stanford 2D-3D datasets. On both datasets, we achieve state-of-the-art performance when encoding user interaction into our deep models.

Paper